IT Cost Blind Spots – Where Most Leaders Fail To Look

IT leaders love reports. They love dashboards. But still… millions get wasted every year in places no one is watching.

Because IT costs don't only live in budgets. They hide in processes, people, habits, and forgotten corners of your tech landscape.

## Introduction

IT leaders are under constant pressure to control costs, optimize spending, and demonstrate value. Budgets are scrutinized, spending is tracked, and financial reports are generated regularly. Yet despite all this attention, many organizations still lose thousands — if not millions — of dollars every year.

Why? Because IT costs don’t just sit neatly inside budgets and invoices. They hide. They blend into operations, processes, and behaviors. They quietly leak from forgotten tools, underused platforms, and legacy habits that nobody questions.

True IT cost leadership is not about looking harder at the budget sheet — it's about looking smarter across the entire IT landscape.

## The Usual Suspects: Where Everyone Looks

Most IT cost control efforts start (and often end) in the same predictable places:

- Software Licenses

- Hardware Assets

- Cloud Consumption Reports

- Vendor Contract Renewals

- Project Budgets

And while these areas are important, focusing only here creates a dangerous illusion of control. The real risks — and opportunities — lie beyond.

## The Real Blind Spots: Where Costs Hide

- 1. Idle SaaS Subscriptions

Teams sign up for tools. Projects end. People leave. But the subscriptions stay — quietly renewing every month.

- 2. Overlapping Tools Doing the Same Job

Different teams buy their own tools for similar tasks — file sharing, project management, messaging — creating both cost duplication and integration headaches.

- 3. Cloud Zombie Resources

Virtual machines running with no owner. Storage buckets left active. Old test environments forgotten. The cloud makes this easy — and expensive.

- 4. Maintenance Contracts Nobody Uses

Legacy systems or hardware with expensive support contracts — even if the system is rarely (or never) used.

- 5. Shadow IT

Tools and services adopted directly by business units without IT knowledge or governance — leading to untracked spending and compliance risks.

- 6. Data Storage Bloat

Keeping everything forever — emails, logs, backups — increases storage needs and costs without adding value.
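Two of these blind spots, idle subscriptions and overlapping tools, can be surfaced from a simple inventory export. The sketch below is a minimal illustration, assuming you can obtain a list of subscriptions with a category and a last-used date. The `Subscription` fields, the 90-day idle threshold, and the sample data are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class Subscription:
    name: str
    category: str        # e.g. "file sharing", "messaging"
    team: str
    monthly_cost: float
    last_used: date      # most recent recorded login or API call


def idle_subscriptions(subs, today, max_idle_days=90):
    """Return subscriptions with no recorded use in the last max_idle_days."""
    return [s for s in subs if (today - s.last_used).days > max_idle_days]


def overlapping_categories(subs):
    """Group tool names by category; keep categories served by more than one tool."""
    by_category = defaultdict(set)
    for s in subs:
        by_category[s.category].add(s.name)
    return {cat: sorted(names) for cat, names in by_category.items() if len(names) > 1}


# Hypothetical inventory: names, costs, and dates are illustrative only.
inventory = [
    Subscription("ToolA", "project management", "Marketing", 400.0, date(2024, 1, 10)),
    Subscription("ToolB", "project management", "Engineering", 650.0, date(2024, 6, 1)),
    Subscription("ToolC", "file sharing", "Sales", 300.0, date(2024, 5, 28)),
]

today = date(2024, 6, 15)
idle = idle_subscriptions(inventory, today)
overlaps = overlapping_categories(inventory)

for s in idle:
    print(f"Idle: {s.name} ({s.team}), {s.monthly_cost:.2f} per month")
for category, names in overlaps.items():
    print(f"Overlap in '{category}': {', '.join(names)}")
```

A flagged item is a prompt for a conversation with the owning team, not an automatic cancellation. Low usage can be legitimate for seasonal or compliance tools.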

## Why Leaders Miss These Blind Spots

- Lack of Visibility: No clear view across the entire IT environment.

- Fragmented Ownership: No single point of accountability for many tools or resources.

- Siloed Teams: Decisions made independently without central coordination.

- Focus on New Spend vs. Existing Waste: Budget reviews focus on "what's next" rather than "what's hanging around."

## How to Find & Fix IT Cost Blind Spots

- 1. Conduct Usage-Based Audits Regularly

Review actual tool and service usage quarterly. Eliminate what’s unused or underused.

- 2. Map Ownership Clearly

Every tool, subscription, or platform should have a clear business owner responsible for its cost and value.

- 3. Implement Shadow IT Detection

Use tools to detect unapproved SaaS usage across the organization.

- 4. Automate Idle Resource Alerts

Set policies and alerts in your cloud environment for unused or underutilized resources.

- 5. Review Contracts with Finance Annually

Don't let contracts auto-renew without a usage and value check.

- 6. Optimize Data Storage Policies

Apply smart retention policies. Archive strategically. Delete ruthlessly when appropriate.
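As a rough illustration of steps 2 and 4, here is a minimal sketch of an idle-resource review. It assumes you have already exported resource data from your cloud provider into plain records. The `CloudResource` fields, the 5% CPU threshold, and the sample values are assumptions for illustration, not any provider's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CloudResource:
    resource_id: str
    owner: Optional[str]      # None when no owner tag is present
    avg_cpu_percent: float    # average utilization over the review window
    monthly_cost: float


def review_findings(resources, cpu_threshold=5.0):
    """Yield (resource_id, reason) for resources that need a human decision."""
    for r in resources:
        if r.owner is None:
            yield r.resource_id, "no owner"
        elif r.avg_cpu_percent < cpu_threshold:
            yield r.resource_id, "underutilized"


def potential_monthly_savings(resources, findings):
    """Sum the monthly cost of every flagged resource."""
    flagged = {resource_id for resource_id, _ in findings}
    return sum(r.monthly_cost for r in resources if r.resource_id in flagged)


# Hypothetical export: IDs, owners, and costs are illustrative only.
resources = [
    CloudResource("vm-1", "data-team", 42.0, 120.0),
    CloudResource("vm-2", None, 1.0, 80.0),
    CloudResource("vm-3", "qa", 2.5, 60.0),
]

findings = list(review_findings(resources))
savings = potential_monthly_savings(resources, findings)

for resource_id, reason in findings:
    print(f"{resource_id}: {reason}")
print(f"Potential monthly savings: {savings:.2f}")
```

Checking for a missing owner before checking utilization is a deliberate choice here: a resource nobody claims is a governance problem even when it is busy.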

## Conclusion

The future of IT cost optimization isn't just about cutting budgets — it's about cutting waste.

IT leaders who go beyond traditional budgeting and develop a culture of visibility, ownership, and proactive cost management will deliver the biggest long-term value to their organizations.

Remember: Every dollar wasted in a blind spot is a dollar stolen from innovation, growth, and future investments.

Find your blind spots. Close them. Reinvest the savings where they matter most.

Maximizing IT ROI – Smart Cost-Saving Strategies Beyond Budget Cuts

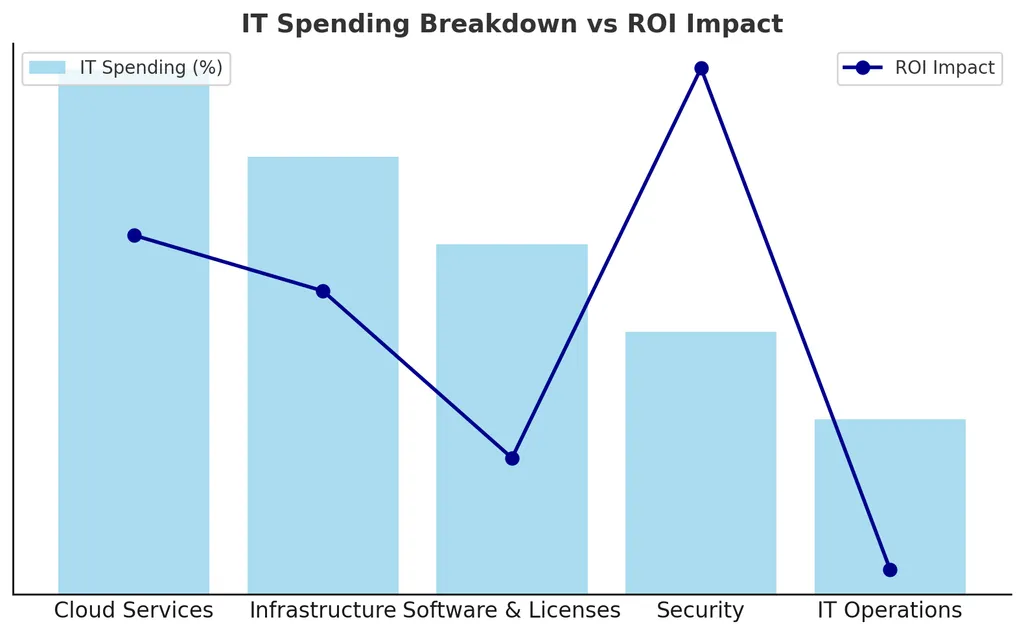

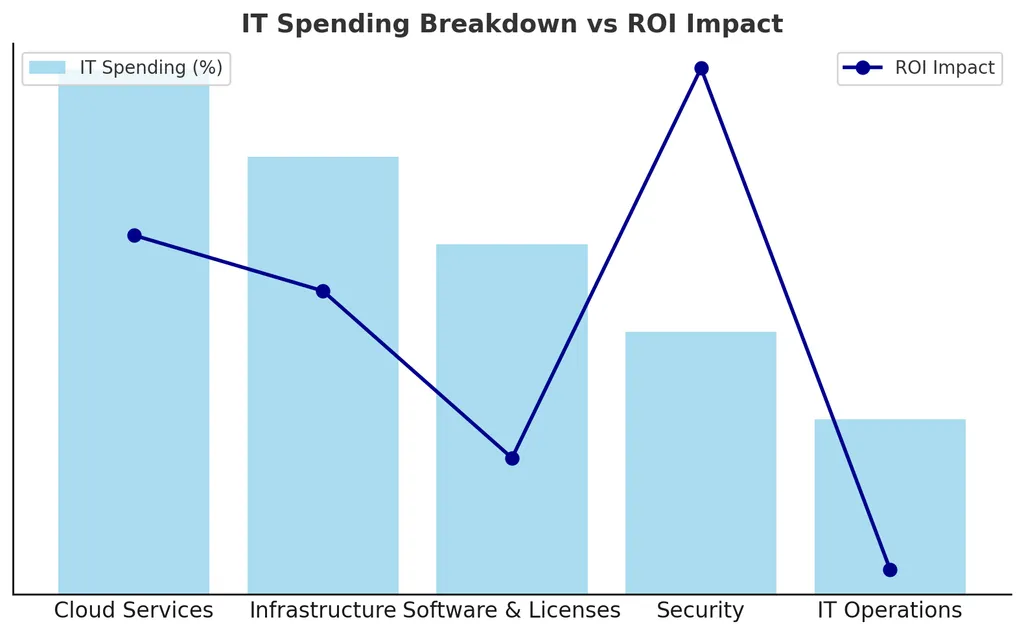

Cost-cutting alone doesn’t equate to long-term IT savings. Instead, businesses must focus on maximizing ROI by optimizing operations, vendor contracts, cloud workloads, and asset lifecycles. A data-driven approach to IT spending ensures efficiency without compromising service quality or innovation.

## Introduction

As IT budgets face increasing scrutiny, CIOs and IT leaders must rethink cost-saving strategies beyond traditional budget cuts. The challenge isn’t just to spend less but to spend smarter—ensuring every dollar invested in IT delivers maximum business value. This blog explores practical strategies to optimize IT investments without sacrificing performance.

## 1. Optimizing IT Operations Without Downgrading Services

Many organizations cut IT budgets reactively, leading to reduced service quality, security risks, and operational inefficiencies. Instead, businesses should:

- Automate routine tasks to reduce manual workload (AIOps, RPA).

- Consolidate IT tools & platforms to avoid redundancy.

- Invest in proactive monitoring to prevent costly outages and downtime.

## 2. Cloud Cost Management Through Smarter Workload Distribution

Cloud costs are among the biggest IT expenditures. Instead of simply reducing cloud services, organizations should optimize workload placement and resource allocation:

- Adopt FinOps practices and cloud cost analysis tools to identify waste.

- Use reserved instances for steady baseline workloads and auto-scaling to match real-time demand.

- Reassess multi-cloud vs. hybrid-cloud strategies based on cost efficiency.
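Whether a reserved commitment pays off depends on how many hours the workload actually runs. The sketch below shows the break-even arithmetic under a simplified model: on-demand capacity is billed only for hours used, while a reservation is billed for every hour of the term. The hourly rates are hypothetical and do not reflect any provider's pricing.

```python
def break_even_utilization(on_demand_hourly, reserved_hourly):
    """Fraction of hours a workload must run before reserving becomes cheaper."""
    if on_demand_hourly <= 0:
        raise ValueError("on_demand_hourly must be positive")
    return reserved_hourly / on_demand_hourly


def cheaper_option(on_demand_hourly, reserved_hourly, expected_utilization):
    """Compare cost per calendar hour at the expected utilization (0.0 to 1.0)."""
    on_demand_cost = on_demand_hourly * expected_utilization
    reserved_cost = reserved_hourly  # paid whether or not the instance runs
    return "reserved" if reserved_cost < on_demand_cost else "on-demand"


# Hypothetical rates, for illustration only.
ON_DEMAND = 0.10   # cost per hour while running
RESERVED = 0.06    # effective cost per hour, billed for every hour of the term

threshold = break_even_utilization(ON_DEMAND, RESERVED)
print(f"Break-even utilization: {threshold:.0%}")
print("Always-on service:", cheaper_option(ON_DEMAND, RESERVED, 0.9))
print("Nightly batch job:", cheaper_option(ON_DEMAND, RESERVED, 0.3))
```

In this model, a workload that runs less often than the break-even fraction is better left on-demand or handled by auto-scaling.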

## 3. Strategic Vendor Negotiations & Contract Optimization

Vendor contracts often include hidden costs and long-term lock-ins. IT leaders can negotiate better deals by:

- Benchmarking vendor pricing against industry standards.

- Seeking performance-based contracts (pay-for-value models).

- Consolidating vendor agreements for better pricing power.

## 4. Enhancing IT Asset Utilization & Lifecycle Management

IT hardware and software often get replaced prematurely, leading to unnecessary expenses. A structured asset lifecycle strategy can reduce costs:

- Extend hardware life with predictive maintenance.

- Leverage refurbished enterprise-grade equipment where feasible.

- Implement IT asset tracking to avoid underutilized resources.

## 5. Data-Driven Decision-Making for IT Budget Efficiency

Cost-saving decisions should be based on data, not assumptions. Organizations should:

- Use analytics to track IT spending trends & ROI.

- Identify underperforming investments and reallocate funds.

- Develop a cost-optimization framework tied to business goals.
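To make this concrete, here is a minimal sketch that ranks investments by a simple ROI formula, (benefit - cost) / cost, and flags those that return less than they cost. The investment names and figures are hypothetical, and real benefit estimates are usually harder to pin down than this suggests.

```python
def roi(benefit, cost):
    """Simple return on investment: net gain divided by cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (benefit - cost) / cost


# Hypothetical annual figures as (estimated benefit, cost); illustrative only.
investments = {
    "Monitoring platform": (180_000, 100_000),
    "Legacy CRM add-on": (40_000, 50_000),
    "Workflow automation": (90_000, 60_000),
}

# Rank from highest to lowest ROI.
ranked = sorted(
    ((name, roi(benefit, cost)) for name, (benefit, cost) in investments.items()),
    key=lambda item: item[1],
    reverse=True,
)

# A negative ROI means the investment costs more than it returns.
underperforming = [name for name, value in ranked if value < 0]

for name, value in ranked:
    print(f"{name}: ROI {value:.0%}")
print("Candidates for reallocation:", underperforming)
```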

## Conclusion

The future of IT cost optimization is about balancing efficiency with innovation. By focusing on smart investments, strategic vendor management, and data-driven insights, IT leaders can ensure their budgets are optimized without compromising growth, security, or service quality.

The Rise of Edge Computing – How It’s Transforming Data Centers

Traditional data centers are under pressure as businesses demand faster processing, lower latency, and real-time analytics. Edge computing is reshaping the landscape by decentralizing processing, reducing bandwidth costs, and enhancing system responsiveness. Understanding its impact is crucial for IT decision-makers.

## Introduction

As AI, IoT, and real-time applications become mainstream, traditional cloud data centers face scalability challenges. Edge computing offers a decentralized alternative, processing data closer to the source to reduce latency and bandwidth dependency.

## 1. What is Edge Computing?

- ✅ Decentralized processing near data sources (IoT devices, sensors, etc.).

- ✅ Reduces latency for real-time analytics and automation.

- ✅ Minimizes bandwidth costs by reducing cloud data transfers.
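The bandwidth point can be illustrated with a small sketch: instead of forwarding every raw reading to the cloud, an edge node summarizes a window of readings and sends only the summary. The sensor values, the ten-minute window, and the JSON payload format below are assumptions for illustration.

```python
import json
from statistics import mean


def summarize(readings):
    """Reduce a window of raw readings to a compact summary."""
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


# Hypothetical sensor: one temperature reading per second for ten minutes.
raw = [20.0 + (i % 10) * 0.1 for i in range(600)]

# Payload size if every reading is sent versus only the summary.
raw_bytes = len(json.dumps(raw).encode("utf-8"))
summary_bytes = len(json.dumps(summarize(raw)).encode("utf-8"))

print(f"Raw payload: {raw_bytes} bytes")
print(f"Summary payload: {summary_bytes} bytes")
```

The trade-off is detail: a summary is enough for dashboards and threshold alerts, but workloads that need every data point still have to ship the raw stream or store it at the edge.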

## 2. Why Edge Computing is Critical for Modern IT Infrastructure

- 📌 Faster Decision-Making – Critical for industries like healthcare, manufacturing, and smart cities.

- 📌 Lower Bandwidth Costs – Reduces dependency on cloud-based data transfers.

- 📌 Enhanced Security – Keeps sensitive data local, so less of it travels over the network to the cloud.

## 3. How Edge Computing Transforms Data Centers

- ✅ Hybrid Data Center Models – Combining centralized cloud with edge nodes.

- ✅ AI & IoT Integration – Processing massive real-time data streams.

- ✅ Scalability & Reliability – Expanding capacity without latency issues.

## Conclusion

Edge computing isn’t replacing traditional data centers—it’s augmenting them. Future-ready IT infrastructures must incorporate edge architectures to ensure agility, performance, and cost efficiency.

The Role of IT Project Management in Business Success

Effective IT project management is essential for delivering successful digital transformations, infrastructure upgrades, and software development. Without proper methodologies, projects risk budget overruns, delays, and misalignment with business objectives. This blog highlights the importance of IT project management, its benefits, and the challenges organizations face in execution.

## Introduction

As businesses rely more on technology, IT projects have become central to growth and operational efficiency. However, many projects fail due to poor planning, lack of resources, or weak execution strategies. Implementing strong project management frameworks ensures on-time, on-budget, and high-quality project delivery.

## Benefits

- Improved Execution & Delivery: Clear timelines, roles, and milestones reduce project risks.

- Better Resource Allocation: Ensures teams work efficiently and within budget.

- Alignment with Business Goals: Ensures projects contribute to overall company success.

- Risk Mitigation: Identifying potential failures early prevents costly rework.

## Challenges

- Scope Creep: Uncontrolled expansion of project requirements.

- Lack of Communication: Misalignment between stakeholders leads to project delays.

- Budget Constraints: Ensuring financial efficiency without compromising project quality.

## Conclusion

Strong IT project management ensures strategic execution, better efficiency, and high-value delivery. By adopting the right methodologies, businesses can maximize success. Stay tuned for practical insights on IT project methodologies in upcoming posts!

Enterprise Architecture - The Silent Force Driving Organizational Efficiency

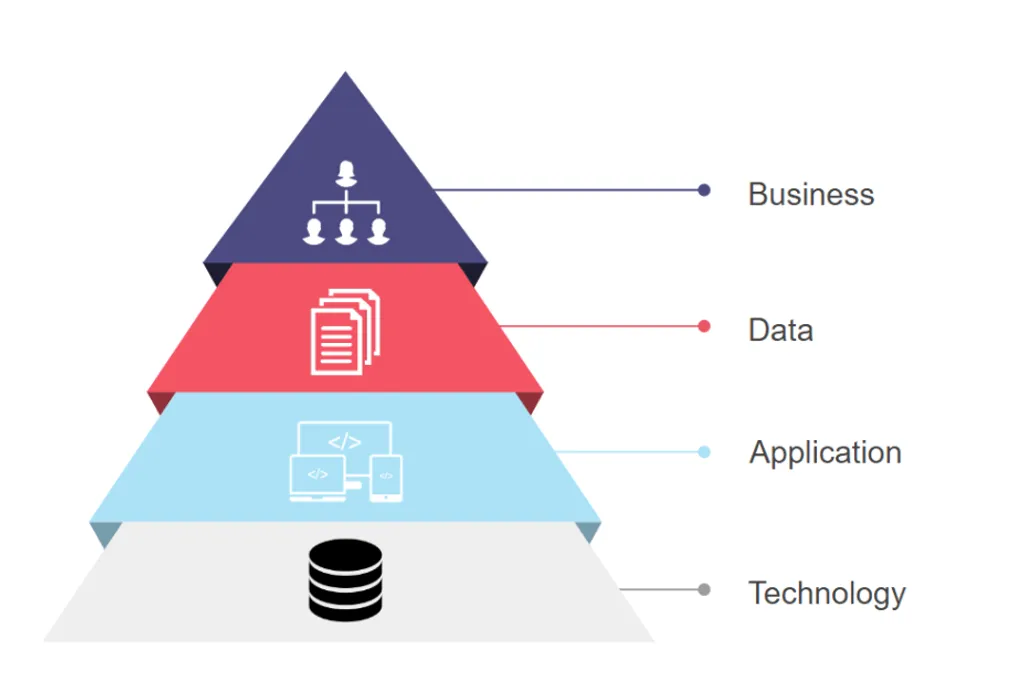

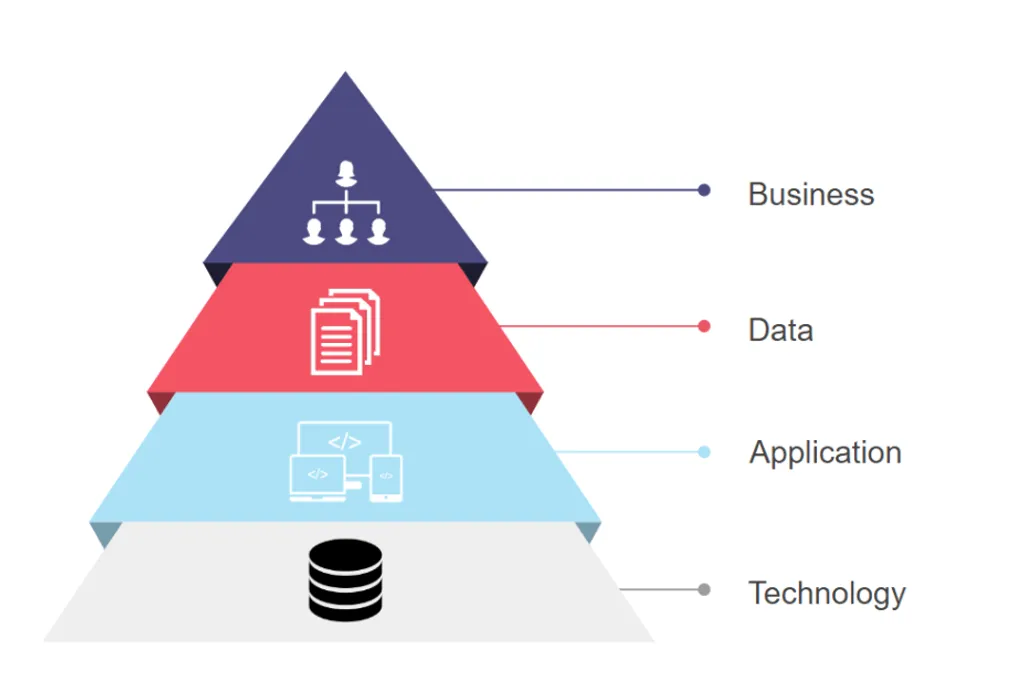

Enterprise Architecture (EA) is a strategic enabler that aligns technology, processes, and resources with business goals. By enhancing visibility, promoting resource reusability, and clarifying ownership, EA drives operational efficiency, cost savings, and innovation. This article explores the critical role of EA in modern organizations, the benefits it delivers, common challenges, and how to embed it as a core component of business strategy.

## Introduction

In today’s fast-paced digital landscape, organizations are constantly seeking ways to streamline operations, cut costs, and accelerate innovation. Yet many overlook a critical enabler that works quietly in the background: Enterprise Architecture (EA). Far beyond technical frameworks and IT blueprints, EA plays a strategic role in maximizing resource visibility, eliminating duplication, and mapping clear ownership across the enterprise. Its true power lies in connecting business goals with technology investments, ensuring that every move is intentional, efficient, and value-driven.

## The Role of Enterprise Architecture

### 1. Enhancing Resource Visibility

Enterprise Architecture brings all organizational assets — systems, data, processes, capabilities — into a single, clear view. By doing so, decision-makers can identify underused assets, recognize opportunities for optimization, and make data-driven investment choices. Visibility not only reduces waste but also empowers faster, smarter decision-making.

### 2. Promoting Reusability and Eliminating Duplicates

In many organizations, different departments often end up purchasing or developing redundant systems because of siloed operations. EA acts as the bridge that connects these silos, promoting the reusability of existing platforms, components, and services. This prevents unnecessary duplication, saving significant costs and reducing operational complexity.

### 3. Clarifying Resource Ownership

Enterprise Architecture provides a clear mapping of resource ownership across the organization. It defines who is responsible for what, creating transparency and accountability. This clarity supports better governance, accelerates problem resolution, and ensures that resources are managed proactively rather than reactively.

## Business Benefits

When implemented effectively, EA delivers a range of business benefits:

- Cost Savings: By eliminating duplicate purchases and optimizing resource usage.

- Operational Efficiency: By aligning technology with business strategy and simplifying processes.

- Innovation Acceleration: By freeing up resources that can be reallocated to growth initiatives.

- Risk Reduction: By ensuring better compliance, security, and continuity through clear ownership and governance.

Together, these benefits move EA from a back-office function to a strategic capability that fuels competitive advantage.

## Challenges and Misconceptions

Despite its value, EA often faces resistance due to misconceptions. Some view it as purely technical or overly bureaucratic. Others mistakenly see it as an optional luxury rather than a strategic necessity. Successful EA initiatives address these misconceptions by:

- Communicating the business value of EA in clear terms.

- Integrating EA into everyday decision-making, not just long-term planning.

- Keeping EA agile and responsive to changing business needs.

Enterprise Architecture must be seen as a living practice that evolves alongside the organization, not as a one-time project.

## Conclusion

In a world where agility, efficiency, and strategic foresight are critical for survival, Enterprise Architecture emerges as a silent but powerful driver of success. It connects the dots between resources, technology, and business goals, ensuring that organizations not only survive but thrive in the face of constant change.

Investing in Enterprise Architecture is not just an IT decision — it is a strategic business imperative.

Ready to unlock the true potential of Enterprise Architecture? Start by making it a core part of your business strategy today.

Why Microsoft Abandoned Its Successful Underwater Data Center Project

In a surprising move, Microsoft has decided to retire Project Natick—its ambitious, futuristic underwater data center initiative. Despite its promising performance and environmental benefits, the project will not see a commercial rollout. This post explores the history of Project Natick, its outcomes, and why Microsoft ultimately chose to move on.

## Introduction

In 2015, Microsoft launched one of the most daring infrastructure experiments in data center history: Project Natick, an underwater data center initiative designed to test whether data centers submerged in the ocean could be more sustainable, efficient, and reliable than their land-based counterparts. Eight years later, the project has officially been shelved—despite proving successful on many fronts.

So what went right—and why did Microsoft walk away?

## The History of Project Natick

Project Natick began as a response to multiple challenges:

- The rising demand for low-latency data delivery.

- Growing concerns around energy usage and sustainability in data centers.

- The desire to deploy data centers closer to coastal population hubs.

### Phase 1 (2015):

Microsoft submerged a prototype off the coast of California. This capsule operated for 105 days and proved the feasibility of submersion without disruption.

### Phase 2 (2018-2020):

A larger vessel was placed 117 feet deep off the coast of the Orkney Islands, Scotland. This version contained 864 servers and 27.6 petabytes of storage and was fully powered by renewable energy from wind and tidal sources.

The capsule operated for over two years without issues, outperforming traditional data centers in terms of reliability and sustainability.

## Outcomes of the Project

Project Natick was not a failed experiment—in fact, it was a remarkable success.

### ✅ Higher Reliability

Microsoft reported that the underwater data center had one-eighth the failure rate of its land-based counterparts. The reduced exposure to human error, corrosion, and temperature fluctuation contributed to this reliability.

### ✅ Environmental Efficiency

- Powered entirely by renewable energy.

- Naturally cooled by seawater, eliminating the need for traditional HVAC systems.

- Reduced carbon footprint and energy costs.

### ✅ Modular & Rapid Deployment

- Data centers could be manufactured, shipped, and deployed within 90 days.

- Ideal for regions with limited space or infrastructure.

### ✅ Proximity to Coastal Populations

- Almost 50% of the global population lives near the coast.

- Underwater data centers could reduce latency and improve connectivity.

## So Why Did Microsoft Abandon It?

Despite its many advantages, Microsoft quietly announced it would not pursue commercial-scale underwater data centers.

Here’s why:

### ❌ Scalability Limitations

- While modular, the pods had a fixed capacity and weren’t easily upgradable or serviceable.

- Scaling would require deploying many units in marine environments, adding logistical and environmental complexity.

### ❌ Maintenance Challenges

- Physical repairs meant bringing the entire unit back to the surface.

- Long-term maintenance and lifecycle planning were not as flexible as with land-based facilities.

### ❌ Regulatory and Environmental Hurdles

- Deploying in coastal waters requires governmental and environmental permissions.

- Potential ecological concerns and jurisdictional red tape presented barriers to global rollout.

### ❌ Cloud Strategy Shift

- Microsoft is doubling down on AI, hybrid cloud, and edge computing, which favor more dynamic, accessible infrastructure.

- Underwater pods, while innovative, don’t align well with the rapid scaling needs of AI model training and inference.

## Conclusion

Project Natick may be over, but it left a lasting impact. It proved that sustainable, resilient, and low-maintenance data centers are possible—even underwater. It offered insight into how modular design, renewable power, and remote operations can shape future infrastructure.

As Microsoft pivots toward AI and global edge computing, Natick will be remembered as an inspiring leap toward sustainable cloud infrastructure. Sometimes, even the most successful pilots don’t make it to production—but their lessons carry forward.
